ACROSS: Adaptive Cross-Modal Representation for Robotic Transfer Learning

Coordinator (PI): Nicolás Navarro-Guerrero, L3S Research Center, Leibniz Universität Hannover

Contributors: Nicolás Navarro-Guerrero and Wadhah Zai El Amri

Start Date: 1st April 2023

End Date: 31st March 2026

A glaring problem in robotics is the reuse of data and solutions. Although data and code can be shared, they cannot be deployed directly on other robotic systems, because those systems may differ in configuration, performance, sensor array, etc. Similarly, data from different robots cannot simply be aggregated into a larger dataset. Hence, training from scratch for each particular robotic system is still the norm.

Tactile perception is a good example of this challenge, because tactile data depends strongly on both the robot hand or gripper and the type of sensor used. Moreover, unlike RGB cameras, tactile sensors have no standard data representation. Additionally, tactile and proprioceptive data are intrinsically active, i.e., exploratory movements are key to recognition.

This project aims to develop a unified representation of tactile data. Such a representation could enable efficient and effective robotic systems that operate across diverse sensing modalities and robots. A further aim is to reuse knowledge acquired on other robotic systems. The project's outcomes are expected to improve object recognition, object manipulation, and robot dexterity. However, the applications of this type of transfer and representation learning go beyond these use cases and beyond robotics.

Project Partners:

Related Publications:


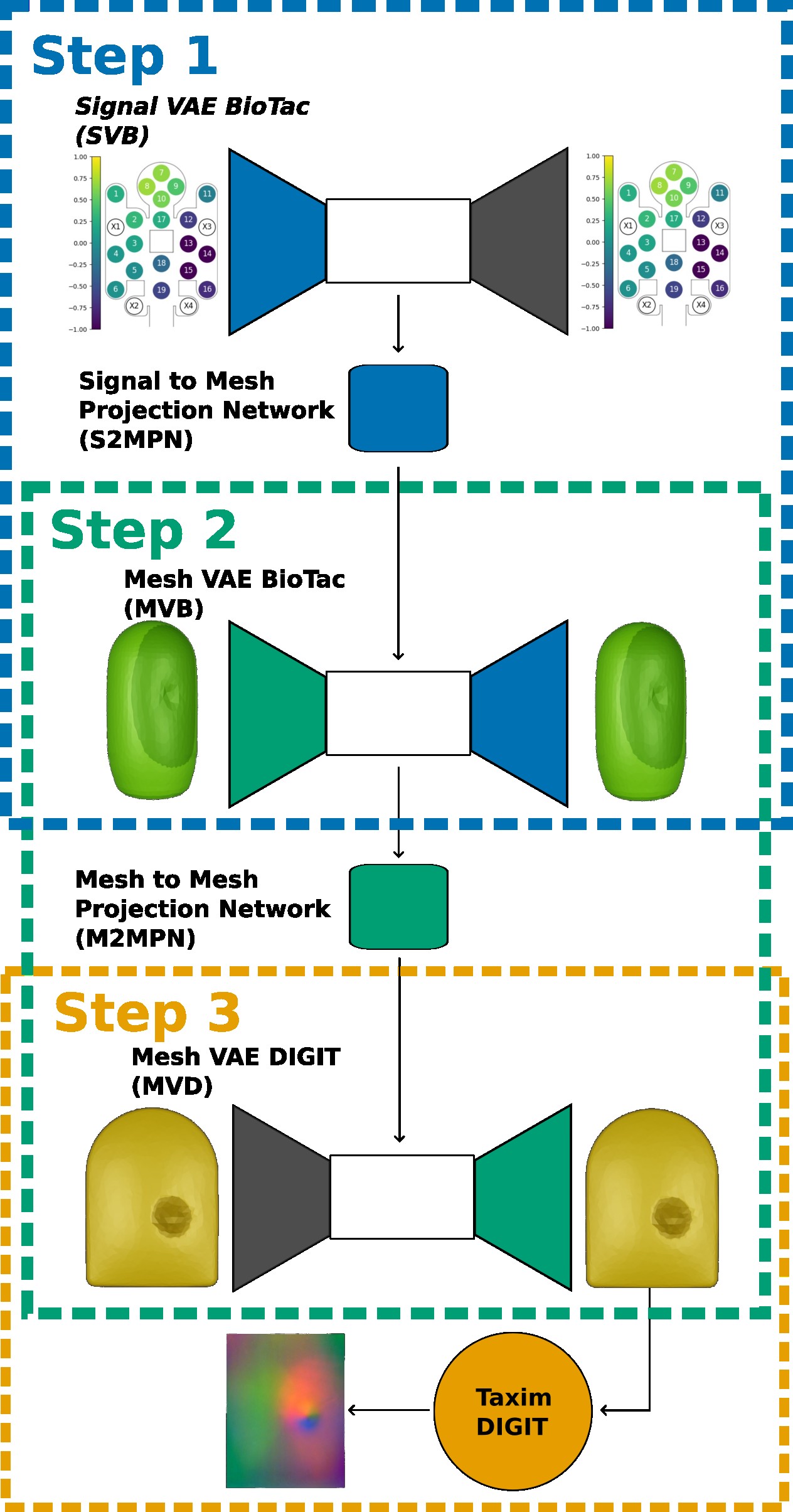

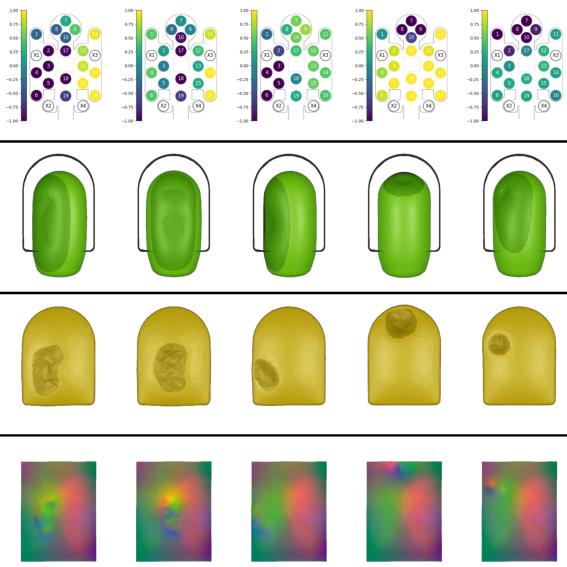

ACROSS: A Deformation-Based Cross-Modal Representation for Robotic Tactile Perception

IEEE International Conference on Robotics and Automation (ICRA). pp. 5836-5842. Atlanta, GA, USA. May 2025. Zai El Amri, Wadhah; Kuhlmann, Malte; Navarro-Guerrero, Nicolás

DOI, PDF, URL, bib file. bibkey: ZaiElAmri2025ACROSS Supplementary material ©2025 IEEE The Authors.

Transferring Tactile Data Across Sensors

40th Anniversary of the IEEE Conference on Robotics and Automation (ICRA). pp. 1540-1542. Rotterdam, The Netherlands. Sep 2024. Zai El Amri, Wadhah; Kuhlmann, Malte; Navarro-Guerrero, Nicolás

DOI, PDF, URL, bib file. bibkey: ZaiElAmri2024Transferring ©2024 IEEE The Authors.

Optimizing BioTac Simulation for Realistic Tactile Perception

International Joint Conference on Neural Networks (IJCNN). pp. 1-8. IEEE World Congress on Computational Intelligence (IEEE WCCI). Yokohama, Japan. Jul 2024. Zai El Amri, Wadhah; Navarro-Guerrero, Nicolás

DOI, PDF, URL, bib file. bibkey: ZaiElAmri2024Optimizing Supplementary material ©2024 IEEE The Authors.

Visuo-Haptic Object Perception for Robots: An Overview

Autonomous Robots. vol. 47, no. 4, pp. 377-403. Apr 2023. Navarro-Guerrero, Nicolás; Toprak, Sibel; Josifovski, Josip; Jamone, Lorenzo

DOI, PDF, URL, bib file. bibkey: Navarro-Guerrero2023VisuoHaptic ©2023 Springer US The Authors.